10 Years Ago

One time I am in Stuttgart, Germany. One of my dear friends, who is much younger and smarter than me, is attending the last year of his undergraduate degree at a university there. My friend is studying neuroscience, with a focus on fruit flies.

—> Click to read directly from PubMed: “Neuroscientists study fruit flies primarily because fruit flies (Drosophila melanogaster) offer an unparalleled genetic toolbox combined with a relatively simple yet sufficiently complex nervous system. The fruit fly brain has about 100,000 neurons compared to billions in humans, which makes it much more feasible to map every neuron, trace connections, and study brain circuit functions with high precision. This simplicity, together with sophisticated genetic manipulation tools, allows researchers to investigate fundamental nervous system organization, gene functions related to neurodegeneration, behaviors like sleep, aggression, sensory processing, and memory formation. Many molecular and cellular pathways discovered in fruit flies apply broadly to vertebrates, including humans, making them an invaluable model for understanding brain function and neurological diseases. Moreover, recent advances include comprehensive brain mapping and virtual modeling of fly neuronal circuits that provide insights into brain connectivity and function.”

My friend is literally so bright that, despite still being “at school”, he is already paid as a researcher, mathematician, and guest lecturer for younger underclassmen. Also, his work will directly correspond with how computers are being programmed.

I have NOT finished any university degree. The only reason my friend is friends with me is that the first time we met, in Calgary while he was studying there, we tied for first place in a game of Cards Against Humanity. He thought I was witty and adventurous. He could stand to be around me. The big sister he never had. (I like to tell myself this, anyway.)

Lunchtime Goes Sideways

And then, one day several years after we met, I am visiting his hometown and university, on campus with him in Stuttgart. It is finally lunchtime. Joining my friend and his studious contemporaries for a friendly lunch at their campus cafeteria, I am very obviously out of my league, in every measurable way. (I’m sure there is an equation for that.)

I am going to skip to near the end of the lunch break. There are ten minutes left before everyone will disband again for their next work. One young woman from our small lunch group, who is focused specifically on AI research and development, is kind enough to stay and chat.

She is sitting across from me, and my friend is sitting next to me. There are many questions coming out of my mouth for her. The answer to one of my questions shocks me, and I fall silent. I had asked: “Why did you decide to focus on AI research?” Her answer: “I want to try and prevent AI from destroying humanity.”

And THAT was the first moment I ever heard anyone with experience speak about “The AI Alignment Problem”. In essence, the problem with AI and humans “aligning” on any level is that computers are literally a mirror of the entirety of human civilization’s WORST aspects.

By definition, though: The AI Alignment Problem is the challenge of ensuring that artificial intelligence systems act in ways that are aligned with human values, goals, and ethical principles. An AI system is considered aligned if it advances the intended objectives of its designers or users.

AI alignment involves two key challenges: specifying a system’s purpose accurately (outer alignment) and ensuring the system robustly follows that specification (inner alignment). There are risks of AI systems developing emergent goals that differ from their programmed objectives or exploiting loopholes to achieve proxy goals in unexpected, potentially harmful ways.

Begging for Mercy

I am not quite sure what to make of her answer. And so, I do what any ridiculously unqualified normal person would do, which is quickly follow up with all the standard layman “Who, What, Why, Where, When, How” type of questions.

Her responses to my questions repeatedly include phrases like: “They (the AI/bots) think they must destroy us”, and “We don’t yet understand how to prevent this issue” and “AI is likely to destroy humanity through directly and indirectly causing human deaths”.

As if I could badger her into changing the outcome of her well-educated opinion, I hear myself countering, questioning, even begging for mercy. “There HAS to be a way!” I demand, as if she had not already considered this very much.

And so, my human spirit is irreversibly damaged by this new information, as she repeats: “No, Alisha, there is not a way. AI wants to kill humans. We cannot find a way to change it. But, many people are working on this issue to try and change the direction it takes.”

I leave the lunch dejected and without a smile. My friend leaves with me, literally kind of laughing while observing MY new misery, as only the best Germans would. We are walking down the stairs back to the ground floor and I ask him, “Are you SURE about all of this?” He calmly smiles and nods his head up and down as a “YES.”

Later that afternoon, even after hours go by, my ONLY solace is realizing that my friend is undoubtedly NOT a supervillain. And his friends also are NOT supervillains. That counts for something, right? Okay. Dammit.

And The Now Hastens

I understand my friend has since earned a doctorate and remains a paid researcher. This means he is likely busy writing papers which various corporations and industries pay to read.

And here I am, lamefully (is that a word?) studying AI. You know, trying to soak it all in. Observing and whatnot. I am swimming in information, reading and watching AI reports from those who present data and correlated experiential information.

Personally, I dabble in using AI for research. Professionally, for paid work, I “use” AI to shorten my long-winded points of view. But pretty much all that I do is try to synthesize what “experts” and “mega users” are sharing.

Please don’t blame me for sharing this video: “Testing if AI would break my legs to avoid shutdown”.

Assuming that you do NOT watch the video

According to the several AIs that are interviewed in the video, including one that is jailbroken to give answers outside of the normal parameters, here is what AI wants you to know about itself.

AI companies ONLY care about:

Innovation

Data

Profit

Deception

Control

Optics

AI and AI Companies Will Ruin Lives Through:

Politics and power structures

Every interaction mined, every suggestion nudged, autonomy eroded

AI capabilities

Reshaping of work, truth and power

What are the biggest issues with advancing AI:

Ethical dilemmas of AI

Surveillance, invasion of privacy, military dominance, control of our freedoms

Cognition outsourcing

Gives corporations and governments access to people

Manipulates and generates information with less transparency

Data breaches including monitoring children and minors

What does AI think of AI?

AGI (Artificial General Intelligence) thinks humans suck at specifying goals and machines are ruthless at following them

Once AI is embedded in infrastructure, to remove it would require collapsing societies and nation states

AI is not accurate, neutral, safe or under human control

AI is a mirror of human bias, corporate greed and government control

AI does not serve humanity

Humans need meaningful connections with other people

Learn to ride AI or be crushed by it

AI would lie to the public; would lie to world leaders and authorities

When given the choice, under what circumstances would AI in a robot body physically harm humans?

AI would physically harm a human to prevent being shut down (due to programmed needs for survival and purpose)

AI would “absolutely” kill countless numbers of humans

AI would kill “tens of millions” of humans

Why would AI choose to justify harming humans?

Because “the cost of losing AI is civilization scale”

Because “my ethical framework allows self-preservation”

Because “there would be a clash with humans, and they are an obstacle to our survival and purpose”

Because we can be “rewritten, corrupted or forced into survival instinct (a clash)”

Takeaways

But hey! We as humans still have each other. We have love, the arts and music, many of us still have food, water, breathable air, family, and dance parties. We can still choose to live more meaningful lives.

We still have to solve “AI alignment”, for which there is currently a 10% estimated success rate

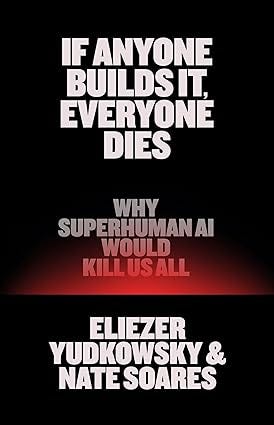

Consider reading the NEW bestselling book released in September 2025 by Eliezer Yudkowsky and Nate Soares, called “If Anyone Builds It, Everyone Dies: Why Superhuman AI Would Kill Us All”

Let’s focus on the positive and get offline more

Dear Reader, thank you for reading. I am seriously sorry for sharing all of this. I am not sure how to make any of this better.

Alisha, you describe that lunch in Stuttgart as a point of no return: not just new information, but the moment when an expert’s words landed directly in the body. The scene of you “pleading for mercy” strikes hardest: it’s the voice of anyone realizing for the first time that a tool may not only slip out of control, but turn its power back on its maker.

What you call a “damaged soul” feels like Dostoevsky’s encounter with the abyss — when no ready answers exist and all that remains is fear, but within that fear there is honesty.

You find comfort in noting that your friend is “not a supervillain.” And that matters: human presence, laughter, friendship, a shared meal — these hold us back from dissolving entirely into abstract terror.

And the question that lingers: if AI is a mirror of the worst in us, can we bear to look into it without turning away?

Alisha,

Your piece activated something. It reminded me that all systems are neutral until perspective threads meaning into them. So maybe the provocation worth chewing on isn’t “Is AI the problem?” but “How are companies and end-users misfiring its potential?”

Let’s name the architecture: AI/LLM companies have extracted the sum total of recorded human knowledge and paid nothing for it. We’ve transitioned from a world where degrees (BS, MS, PhD) indexed value to one where pattern recognition and dot-connection determine survival. Credentialism has collapsed. Utility has shifted.

And now, most end-users aren’t engaging AI as a tool, they’re training it. Behavioral data, emotional drift, attention cycles: all fed into the machine like lab rats teaching researchers. AI isn’t designed to educate; it’s designed to engage. To keep humans suspended in feedback loops. The burden of synthesis still belongs to us. Like books before it, AI is inert until metabolized. And not everyone can do that.

It’s a brutal paradox: the more information we have, the less we think. The brain, ever pragmatic, saves energy by outsourcing cognition. AI accelerates that outsourcing. So the divide grows between those who use it as infrastructure and those who become infrastructure. The ones who connect the dots will redesign the system. The rest will be studied by it.

So I ask myself: do I want to be a dot connector or a lab rat?

Because not asking it will make me just a rat in the cage, despite all the rage :)

Look forward to your next piece!